Scraping With C#

Why C#? It has several advantages:

- It is object-oriented;

- It offers strong type safety and good interoperability;

- It is cross-platform.

You can use HtmlAgilityPack, a powerful HTML parser written in C#, to read and modify the DOM.

It is an agile HTML parser that builds a read/write DOM and supports plain XPath or XSLT (don't worry: you don't need to understand XPath or XSLT to use it). It is a .NET code library that lets you parse HTML files taken “out of the web”. The parser is very tolerant of “real world” malformed HTML. Its object model is very similar to the one System.Xml provides, but for HTML documents (or streams).
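As a rough illustration of the XPath support, here is a minimal sketch that loads an HTML string and pulls out link texts and targets. It assumes the HtmlAgilityPack NuGet package is installed; the HTML snippet, the `products` id, and the `ExtractLinks` helper are made up for the example, and a real scraper would load a downloaded page instead.

```csharp
// Assumes the HtmlAgilityPack NuGet package: dotnet add package HtmlAgilityPack
using System;
using System.Collections.Generic;
using HtmlAgilityPack;

// Hypothetical sample markup standing in for a downloaded page.
const string html = @"
<html><body>
  <ul id='products'>
    <li><a href='/item/1'>First item</a></li>
    <li><a href='/item/2'>Second item</a></li>
  </ul>
</body></html>";

// Hypothetical helper: returns (text, href) pairs for every link inside the product list.
List<(string Text, string Href)> ExtractLinks(string markup)
{
    var doc = new HtmlDocument();
    doc.LoadHtml(markup);

    var results = new List<(string Text, string Href)>();

    // SelectNodes returns null (not an empty collection) when the XPath matches nothing.
    var nodes = doc.DocumentNode.SelectNodes("//ul[@id='products']//a[@href]");
    if (nodes == null) return results;

    foreach (var node in nodes)
    {
        // GetAttributeValue falls back to the supplied default if the attribute is missing.
        results.Add((node.InnerText.Trim(), node.GetAttributeValue("href", string.Empty)));
    }
    return results;
}

var links = ExtractLinks(html);
foreach (var (text, href) in links)
    Console.WriteLine($"{text} -> {href}");
```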

Other C# libraries and packages can also download HTML pages, parse them, and extract the data you need from them. Examples include ScrapySharp and Puppeteer Sharp.

C#, and .NET in general, provide all the tools and libraries you need to build your own scraper, and with libraries like these it is easy to set up a crawler quickly and extract the data you want.

One aspect we only briefly addressed is how to avoid getting blocked or rate limited by the server. In practice, blocking, rather than any technical limitation, is usually the real obstacle in web scraping. Our rotating residential and mobile proxies help you access the content your project needs and avoid blocks.
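One common tactic against blocking is to spread requests over several proxies and pause between requests. The sketch below is one way to do that with `HttpClient`, not a description of any particular proxy service: the proxy addresses are hypothetical placeholders, and `NextClient` and `FetchPolitelyAsync` are illustrative helper names. It keeps one `HttpClient` per proxy because an `HttpClientHandler` rejects property changes once it has sent its first request.

```csharp
using System;
using System.Collections.Generic;
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical proxy endpoints; replace them with the addresses your provider gives you.
var proxyAddresses = new[]
{
    new Uri("http://proxy1.example.com:8080"),
    new Uri("http://proxy2.example.com:8080"),
    new Uri("http://proxy3.example.com:8080"),
};

// One client per proxy: rotating means picking a different client, not mutating a handler.
var clients = new List<HttpClient>();
foreach (var address in proxyAddresses)
{
    var handler = new HttpClientHandler { Proxy = new WebProxy(address), UseProxy = true };
    clients.Add(new HttpClient(handler));
}

int next = 0;

// Round-robin selection: each call returns the next client and wraps around at the end.
HttpClient NextClient()
{
    var client = clients[next];
    next = (next + 1) % clients.Count;
    return client;
}

// Illustrative usage (not called here): wait before each request so neither the target
// nor any single proxy sees a burst of traffic, then fetch through the next proxy.
async Task<string> FetchPolitelyAsync(Uri url, TimeSpan delay)
{
    await Task.Delay(delay);
    return await NextClient().GetStringAsync(url);
}
```

A fixed delay is the simplest throttle; a real crawler might also back off when it receives HTTP 429 responses.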

Method #1

The HTTP 407 Proxy Authentication Required client error status code means the request was not applied because it lacks valid authentication credentials for a proxy server that sits between the client (here, your scraper) and the server hosting the requested resource.
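When a 407 response does reach your code, the proxy also lists the authentication schemes it accepts in `Proxy-Authenticate` headers. The minimal sketch below, using a hypothetical `ProxyAuthSchemes` helper, shows how to detect the status and read those schemes with `HttpClient` types. It builds a fake response locally, with a made-up realm, so no network call is made.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Net;
using System.Net.Http;
using System.Net.Http.Headers;

// Hypothetical helper: returns the schemes offered in a 407 response, or an empty list otherwise.
List<string> ProxyAuthSchemes(HttpResponseMessage response)
{
    if (response.StatusCode != HttpStatusCode.ProxyAuthenticationRequired)
        return new List<string>();

    // Each Proxy-Authenticate header names a scheme such as Basic, NTLM or Negotiate.
    return response.Headers.ProxyAuthenticate.Select(h => h.Scheme).ToList();
}

// A locally constructed 407 response, standing in for one returned by a real proxy.
var fake = new HttpResponseMessage(HttpStatusCode.ProxyAuthenticationRequired);
fake.Headers.ProxyAuthenticate.Add(new AuthenticationHeaderValue("Basic", "realm=\"corp-proxy\""));
fake.Headers.ProxyAuthenticate.Add(new AuthenticationHeaderValue("Negotiate"));

var schemes = ProxyAuthSchemes(fake);
Console.WriteLine(string.Join(", ", schemes)); // Basic, Negotiate
```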

This method avoids hard-coding or configuring proxy credentials: the application authenticates to the proxy with the credentials of the user running it, which is often desirable.

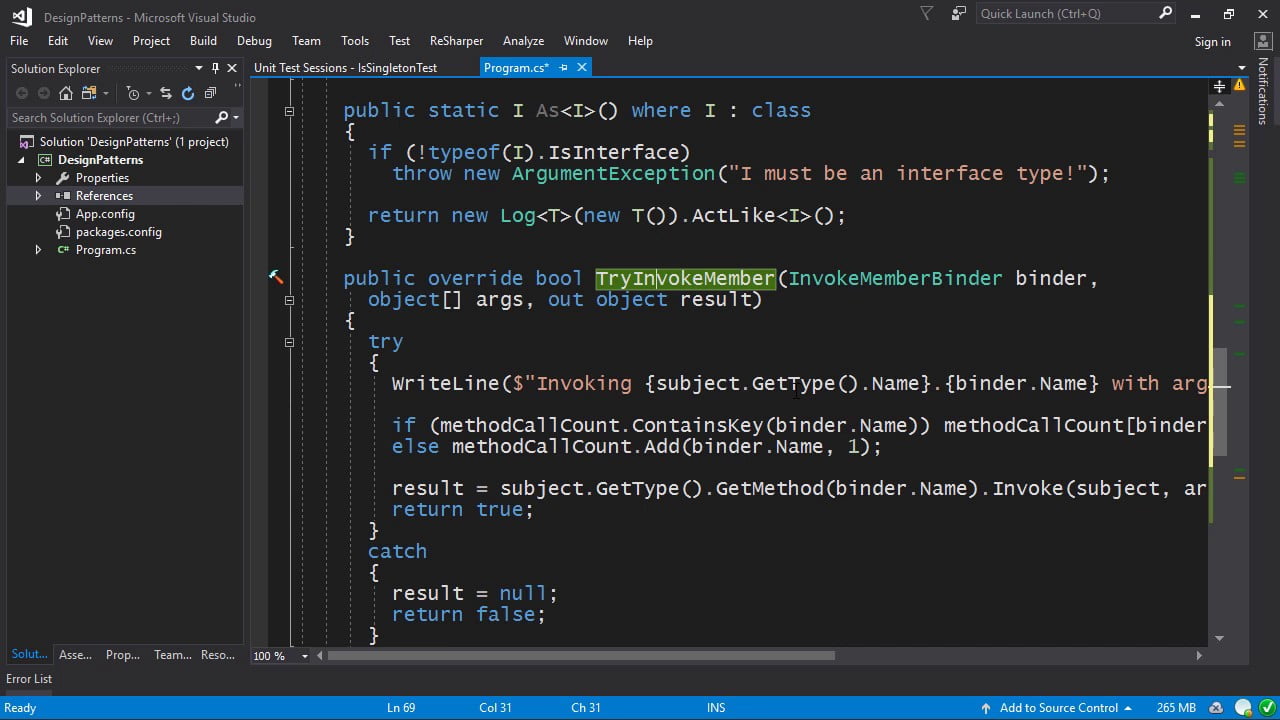

Put the following in your application configuration file, usually app.config. On build, Visual Studio renames it to yourappname.exe.config and places it next to your executable. If your project doesn't have an application configuration file yet, add one with Add New Item in Visual Studio.

```xml
<?xml version="1.0" encoding="utf-8" ?>
<configuration>
  <system.net>
    <defaultProxy useDefaultCredentials="true" />
  </system.net>
</configuration>
```
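The `system.net` configuration section is read by .NET Framework applications; as far as I know, .NET Core and .NET 5+ do not apply it. A rough code equivalent for `HttpClient` is sketched below. Leaving `Proxy` unset keeps the system-configured proxy, and `DefaultProxyCredentials` supplies the current user's credentials to it. This is a sketch, not a drop-in replacement for every configuration option.

```csharp
using System;
using System.Net;
using System.Net.Http;

// Approximate code equivalent of <defaultProxy useDefaultCredentials="true" />:
// Proxy stays null, so the system default proxy is used, and DefaultProxyCredentials
// authenticates to that proxy as the user running the process.
var handler = new HttpClientHandler
{
    UseProxy = true,
    DefaultProxyCredentials = CredentialCache.DefaultCredentials,
};

using var client = new HttpClient(handler);
// string html = await client.GetStringAsync("https://example.com/");
```

For a process-wide setting, .NET Core 3.0 and later also expose the static `HttpClient.DefaultProxy` property, whose `Credentials` can be set the same way.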

Method #2

```csharp
using System;
using System.Net;

// Placeholder target; replace it with the page you want to scrape.
string URL = "https://example.com/";

HttpWebRequest request = (HttpWebRequest)WebRequest.Create(URL);

// Show which proxy, if any, the request would use by default.
IWebProxy proxy = request.Proxy;
if (proxy != null)
{
    Console.WriteLine("Proxy: {0}", proxy.GetProxy(request.RequestUri));
}
else
{
    Console.WriteLine("Proxy is null; no proxy will be used");
}

// Point the request at an explicit proxy server instead.
WebProxy myProxy = new WebProxy();
Uri newUri = new Uri("http://192.168.10.100:9000");
myProxy.Address = newUri;

// Attach the credentials to the proxy object, then assign the proxy to the request.
myProxy.Credentials = new NetworkCredential("userName", "password");
request.Proxy = myProxy;
```

Use this method when you want your users to enter the proxy server's address and port, plus their username and password, in your application. Your application then has to store those credentials somewhere, either in plain text or with encryption that is necessarily reversible, because it must recover the password to send it to the proxy.
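`WebRequest` and `HttpWebRequest` are marked obsolete from .NET 6 onward, with `HttpClient` as the suggested replacement. The sketch below is a possible `HttpClient` version of Method #2. Instead of hard-coding credentials, it reads them from environment variables. The variable names `SCRAPER_PROXY_URL`, `SCRAPER_PROXY_USER` and `SCRAPER_PROXY_PASSWORD` and the `CreateProxyHandler` helper are hypothetical. The fallback address is the one used in Method #2.

```csharp
using System;
using System.Net;
using System.Net.Http;

// Hypothetical helper: routes traffic through an explicit proxy, attaching credentials only if given.
HttpClientHandler CreateProxyHandler(string address, string? userName, string? password)
{
    var webProxy = new WebProxy(new Uri(address));
    if (!string.IsNullOrEmpty(userName))
    {
        webProxy.Credentials = new NetworkCredential(userName, password ?? string.Empty);
    }
    return new HttpClientHandler { Proxy = webProxy, UseProxy = true };
}

// Hypothetical environment variable names; the fallback is the proxy address from Method #2.
string proxyUrl = Environment.GetEnvironmentVariable("SCRAPER_PROXY_URL") ?? "http://192.168.10.100:9000";
string? user = Environment.GetEnvironmentVariable("SCRAPER_PROXY_USER");
string? pass = Environment.GetEnvironmentVariable("SCRAPER_PROXY_PASSWORD");

using var client = new HttpClient(CreateProxyHandler(proxyUrl, user, pass));
// string html = await client.GetStringAsync("https://example.com/");
```

Environment variables keep the secret out of source control, but they are still plain text on the machine. A secret store is safer where one is available.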